I wanted to explore just how good Microsoft’s AI services really are, so I set up Azure Cognitive Services to detect celebrities from Twitter photos and gauge their emotions. Here’s what happened when I pointed the Face and Computer Vision APIs at #breakingnews tweets, and how I shaved 12 months off Bill Gates’ age. Microsoft gives you a 12-month Azure free trial, so I thought I would have a play with some of their Cognitive Services APIs.

I set up both the Face and Computer Vision tools. The Face tool has over 1 million people matched and can recognize gender, age, and emotion. This gave me an idea: look for celeb photos on Twitter under the hashtag #breakingnews to see how accurately the services identified celebs and how well they gauged their emotions.

Setting Up Cognitive Services in Azure

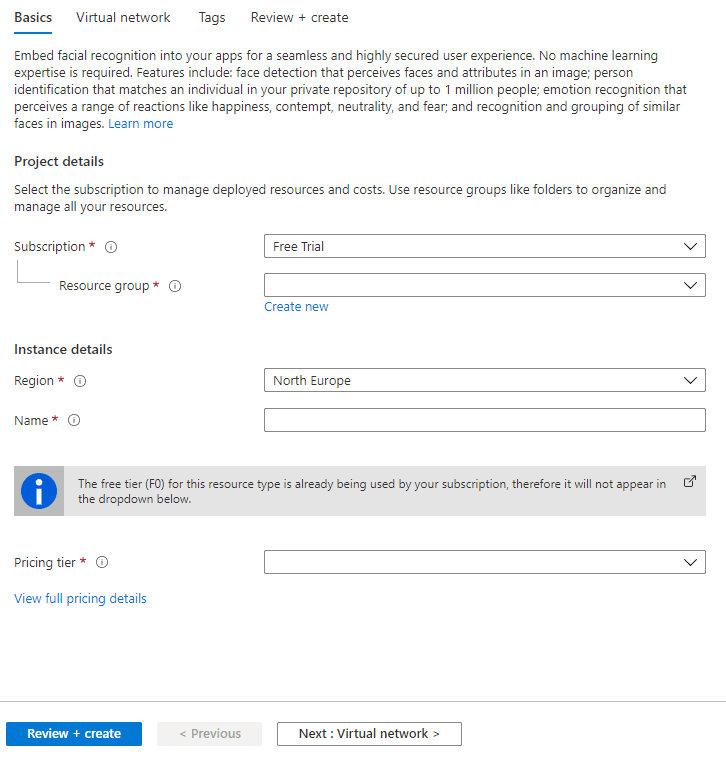

Setting up the services was done via the Cognitive Services area of Azure. You can search the marketplace for the tool you need; in this case I searched for and created both Face and Computer Vision. The wizard for each was pretty straightforward: create a resource group, choose a region, pick a unique name for your instance (which becomes part of your HTTP API URL) and select a pricing tier, one of which is free.

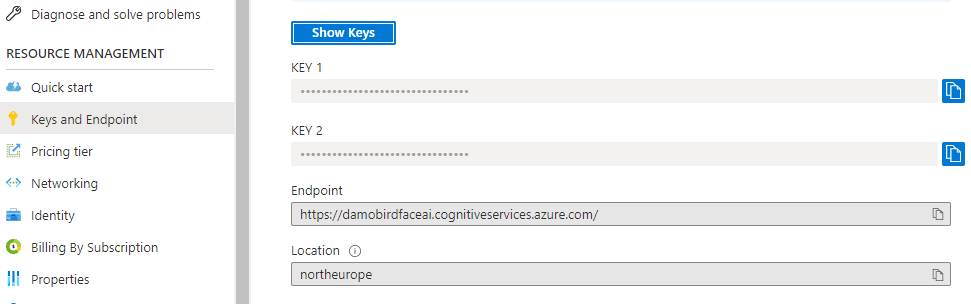

Very quickly your API is up and running and you are presented with a quick start guide with C# and Python examples. I took my idea to Power Automate instead, and grabbed a copy of my endpoint URL and one of the access keys.

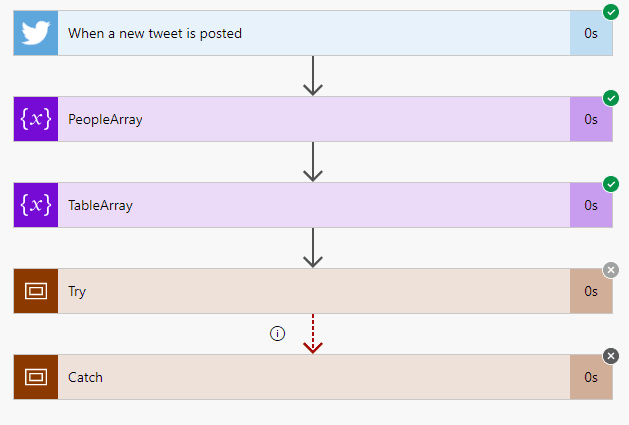

Building the Power Automate Flow

I chose to limit the flow's trigger with a trigger condition requiring that the MediaUrls entity was not empty, hopefully ensuring that the only tweets picked up would be those with a photo included.

My flow is triggered by the hashtag #breakingnews whenever the following trigger condition is met:

@not(empty(triggerOutputs()?['body/MediaUrls']))
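For anyone more at home in code than in flow expressions, here is roughly what that trigger condition does, as a minimal Python sketch. The tweet dictionaries and the `TweetText` field are made up for illustration; only `MediaUrls` comes from my flow.

```python
def has_media(tweet: dict) -> bool:
    """Rough equivalent of @not(empty(triggerOutputs()?['body/MediaUrls'])).

    Power Automate's empty() is true for null, '', [] and {}, which lines up
    with Python truthiness closely enough for this check.
    """
    return bool(tweet.get("MediaUrls"))


# Illustrative tweets only; real trigger output carries many more fields.
tweets = [
    {"TweetText": "#breakingnews with a photo", "MediaUrls": ["https://example.com/a.jpg"]},
    {"TweetText": "#breakingnews text only", "MediaUrls": []},
    {"TweetText": "#breakingnews field missing"},
]

# Only tweets that carry at least one media URL get through.
with_photos = [t for t in tweets if has_media(t)]
```

The `?` in the flow expression plays the same role as `.get()` here: a missing field yields null rather than an error.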

I also used two array variables to hold the people data picked out of the massive body of data returned by the API calls, plus a third array to format that data for an HTML table summarizing the age, gender and emotion of each person found by the Face calls.

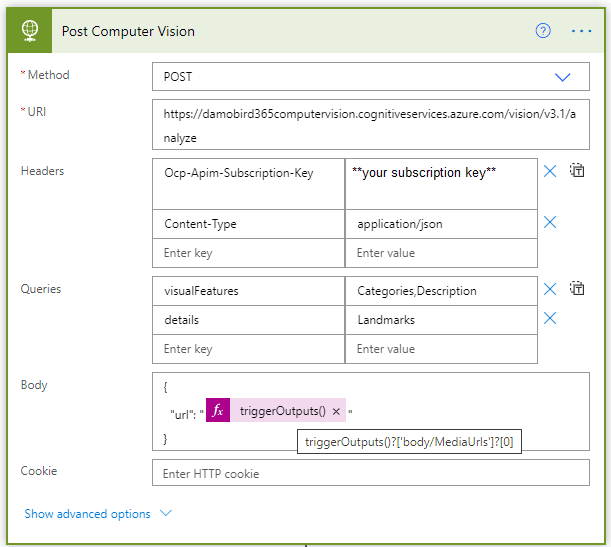
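The array-to-table step is easier to picture in code. This is only a sketch of the idea in Python, not what Power Automate does internally; the function name and the column order are my own choices for illustration.

```python
import html


def people_to_html_table(people: list) -> str:
    """Render a list of per-person records as a simple HTML table."""
    columns = ("age", "gender", "emotion")
    header = "".join(f"<th>{c.title()}</th>" for c in columns)
    rows = "".join(
        "<tr>"
        + "".join(f"<td>{html.escape(str(p.get(c, '')))}</td>" for c in columns)
        + "</tr>"
        for p in people
    )
    return f"<table><tr>{header}</tr>{rows}</table>"


# Plays the role of the flow's array variable: one entry appended per face found.
people = []
people.append({"age": 62, "gender": "male", "emotion": "neutral"})

table = people_to_html_table(people)
```

Escaping each cell with `html.escape` is a habit worth keeping when the values come back from an external service.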

Calling the Computer Vision API

Computer Vision API HTTP POST

After calling the Computer Vision API on the first element of the MediaUrls array, I used a condition to check that neither the celebrities nor the captions in the response body were empty:

empty(body('Parse_JSON')?['categories']?[0]?['detail']?['celebrities'])

empty(body('Parse_JSON')?['categories']?[0]?['captions']?['text'])

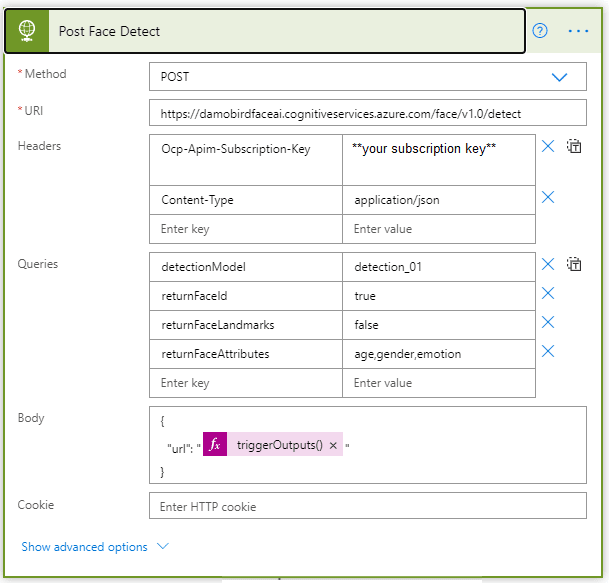

If both conditions were met, I then ran the Face API to detect the gender, age and emotion, and appended these to the array I set up earlier.
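If you would rather make the Computer Vision call from code, the request and the two emptiness checks might look something like the Python sketch below. Several things here are assumptions rather than a copy of my flow: the `v3.2` API version and query string, the placeholder endpoint, and the response shape, where I am assuming captions sit under `description` (adjust the paths to match your own Parse JSON schema). The sample response is trimmed to the values shown in my results.

```python
import json
import urllib.request


def build_analyze_request(endpoint: str, key: str, image_url: str) -> urllib.request.Request:
    """Build (but do not send) an Analyze Image POST for one image URL."""
    # Assumed API version and features; check your own resource's quick start.
    url = (endpoint.rstrip("/")
           + "/vision/v3.2/analyze?visualFeatures=Categories,Description&details=Celebrities")
    return urllib.request.Request(
        url,
        data=json.dumps({"url": image_url}).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        method="POST",
    )


def extract_celebrities(analysis: dict) -> list:
    """Collect celebrity entries from every category, not only categories[0]."""
    found = []
    for category in analysis.get("categories") or []:
        detail = category.get("detail") or {}
        found.extend(detail.get("celebrities") or [])
    return found


def extract_caption(analysis: dict) -> str:
    """Return the first caption text, or '' when no caption came back."""
    captions = (analysis.get("description") or {}).get("captions") or []
    return captions[0].get("text", "") if captions else ""


# Trimmed sample response using the values from the Bill Gates result.
sample = {
    "categories": [
        {"detail": {"celebrities": [{"name": "Bill Gates", "confidence": 0.9973201155662537}]}}
    ],
    "description": {"captions": [{"text": "Bill Gates wearing glasses"}]},
}

celebs = extract_celebrities(sample)
caption = extract_caption(sample)

# Same gate as the flow's condition: only carry on to Face when both are present.
should_call_face = bool(celebs) and bool(caption)
```

To actually send the request you would pass it to `urllib.request.urlopen()` and run `json.load()` on the response; I have left that out so the sketch runs without a key.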

Running the Face API

Face API HTTP POST

In my first attempt I emailed myself a summary of the data captured, but after seeing some of the results I began retweeting the image with the data obtained from both APIs.
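As a rough code equivalent of the Face step, the Python sketch below builds a detect request asking for age, gender and emotion, boils one face down to its headline attributes, and composes a line of text for the retweet. It assumes those attributes are available on your Face resource. The endpoint is a placeholder and the wording of the tweet text is invented for illustration; my flow's actual text differs.

```python
import json
import urllib.request


def build_detect_request(endpoint: str, key: str, image_url: str) -> urllib.request.Request:
    """Build (but do not send) a Face detect POST for one image URL."""
    url = endpoint.rstrip("/") + "/face/v1.0/detect?returnFaceAttributes=age,gender,emotion"
    return urllib.request.Request(
        url,
        data=json.dumps({"url": image_url}).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        method="POST",
    )


def summarize_face(face: dict) -> dict:
    """Reduce one detected face to gender, age and its highest-scoring emotion."""
    attrs = face.get("faceAttributes") or {}
    emotion = attrs.get("emotion") or {}
    top = max(emotion, key=emotion.get) if emotion else None
    return {"gender": attrs.get("gender"), "age": attrs.get("age"), "emotion": top}


def tweet_text(caption: str, summary: dict) -> str:
    """Hypothetical retweet wording combining both APIs' output."""
    return (f"{caption}. Face API: {summary['gender']}, "
            f"age {summary['age']}, mostly {summary['emotion']}.")


# One face, using the attribute values from the Bill Gates result.
sample_face = {
    "faceAttributes": {
        "gender": "male",
        "age": 62,
        "emotion": {
            "anger": 0, "contempt": 0, "disgust": 0, "fear": 0,
            "happiness": 0, "neutral": 0.649, "sadness": 0, "surprise": 0.35,
        },
    }
}

summary = summarize_face(sample_face)
text = tweet_text("Bill Gates wearing glasses", summary)
```

The detect call returns a list with one entry per face, so for a group photo you would run `summarize_face` over each element.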

The Results

[Photo: cdn.vox-cdn.com/uploads/chorus_image/image/66666566/1185999102.jpg.0.jpg]

"text": "Bill Gates wearing glasses" | Photo by Mike Cohen/Getty Images for The New York Times

"name": "Bill Gates"

"confidence": 0.9973201155662537

"gender": "male"

"age": 62,

"emotion": {

"anger": 0

"contempt": 0

"disgust": 0

"fear": 0

"happiness": 0

"neutral": 0.649

"sadness": 0

"surprise": 0.35

}

The above photo was taken in 2019 when Bill was 63, so he will be pleased to see the AI thinks he looks a year younger.

If you have arrived here as a result of one of the automatic tweets sent by my Microsoft #PowerAutomate routine and you would like to know more or have the tweet removed, please get in touch below.